Many people confuse bandwidth with network speed and assume latency doesn’t matter on fiber. That’s not how it works. Imagine a 10 Gbps fiber connection shared with a few other users that never comes close to saturating the link’s total bandwidth. Latency is still high and highly variable. The key point is that bandwidth is not the only, or even the main, Quality of Experience (QoE) factor these days. Let’s take a closer look at latency, bufferbloat and jitter. Once you understand how they work, minor tweaks can considerably improve your network and user experience.

Bandwidth is often the first thing people look at when comparing network connections. But just as you probably didn’t choose your last car solely on horsepower (or even think much about it), bandwidth is rarely the most important factor in networking technology.

The truth is that bandwidth has never been a measure of network speed, even though the two terms are often (and incorrectly!) used interchangeably. Bandwidth is like the width of a water pipe: it measures how much data can flow through a network per second. Higher bandwidth lets more data flow at once, but it is only one of the factors affecting overall network performance. Others include bufferbloat, packet loss, latency and jitter. Any of them can degrade throughput and make a high-capacity link perform like one with much less bandwidth. Users often need less bandwidth than they think, and once a connection has enough capacity, buying more won’t make Netflix, Fortnite, YouTube or regular web pages load any faster.

An end-to-end network path usually consists of multiple links, each with its own bandwidth capacity. The link with the lowest bandwidth is often called the bottleneck, because it limits the throughput of the whole path. Each individual link also adds latency: the time between the start and the end of a transmission, that is, the time needed to push a packet’s bits onto the link. Alongside bandwidth, loss, latency, jitter and out-of-order delivery are the four quality criteria commonly used to describe a network path.
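To make the bottleneck idea concrete, here is a minimal Python sketch. The link rates are hypothetical, and it assumes the path’s throughput is capped by its slowest link and that the per-link transmission (serialization) delay is simply packet size divided by link rate, ignoring framing overhead.

```python
def bottleneck_mbps(link_rates_mbps):
    """Capacity of the slowest link, which caps throughput for the whole path."""
    return min(link_rates_mbps)


def serialization_delay_ms(packet_bytes, link_rate_mbps):
    """Time needed to push one packet's bits onto a link, in milliseconds."""
    bits = packet_bytes * 8
    return bits / (link_rate_mbps * 1_000_000) * 1000


# Hypothetical path: Wi-Fi, home uplink, ISP core, data-centre link (Mbps)
path = [300, 50, 10_000, 1_000]
print(bottleneck_mbps(path))  # 50

# Transmission delay of a 1500-byte packet on each hop
for rate in path:
    print(f"{rate} Mbps: {serialization_delay_ms(1500, rate):.4f} ms")
```

The slowest hop both limits throughput and adds the largest per-packet transmission delay (0.24 ms here), which is why upgrading any other link in this path would make little difference.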

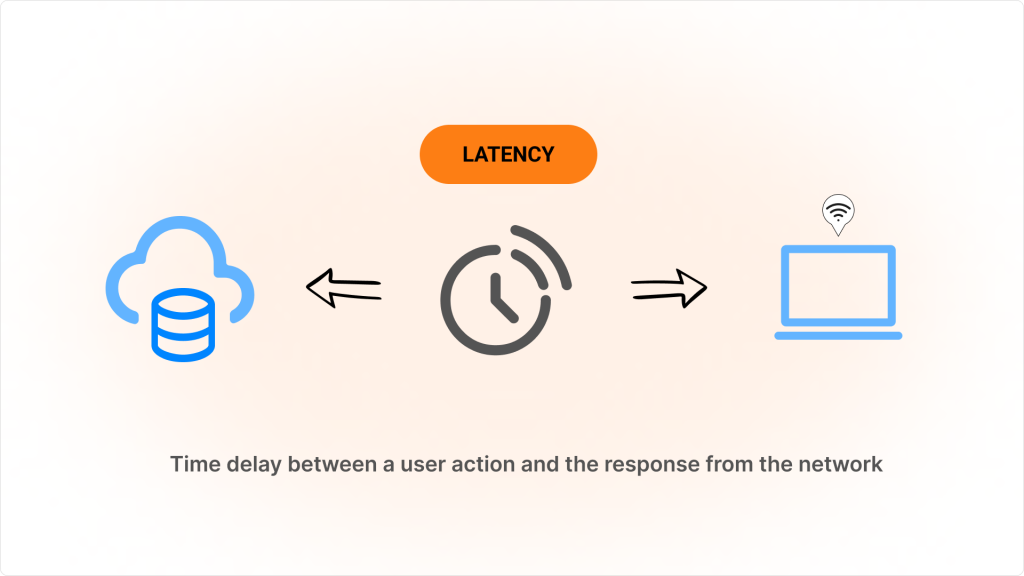

If bandwidth is the amount of information sent per second, latency is the time it takes a data packet to travel from one point to another across a network. It’s the lag, or delay, you experience while waiting for something to load, and it’s typically measured in milliseconds (ms).
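A rough way to see latency for yourself is to time a TCP handshake, which takes about one round trip. This Python sketch uses only the standard library; the function name `tcp_connect_ms` is hypothetical, and the result also includes some local processing time, so treat it as an approximation rather than a precise round-trip measurement.

```python
import socket
import time


def tcp_connect_ms(host, port, timeout=2.0):
    """Approximate one round-trip time by timing a TCP three-way handshake.

    connect() returns once the SYN / SYN-ACK exchange has completed, so the
    elapsed time is roughly one RTT plus a little local overhead.
    """
    # Resolve the name first so DNS lookup time is not counted as latency
    family, sock_type, proto, _, addr = socket.getaddrinfo(
        host, port, type=socket.SOCK_STREAM
    )[0]
    with socket.socket(family, sock_type, proto) as sock:
        sock.settimeout(timeout)
        start = time.perf_counter()
        sock.connect(addr)
        elapsed = time.perf_counter() - start
    return elapsed * 1000
```

For example, calling `tcp_connect_ms("example.com", 443)` several times in a row shows both the typical latency to that server and how much it varies between attempts.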

What causes latency? Typically, there are two main factors: congestion and/or distance. A substantial portion of overall latency can be attributed to the “last mile,” the final leg of the network that connects end users to the internet provider’s infrastructure. Specific percentages vary, but in residential settings it’s not uncommon for the last mile to account for a significant share of the total delay users experience (often 30% or more), especially where infrastructure is outdated or connections are oversubscribed. Fiber optic networks generally have lower latency than copper-based technologies such as DSL or cable.

Understanding and addressing last-mile latency can be crucial for anyone looking to improve their internet performance. This often calls for lower-latency connections or ISP-managed services that prioritize stable latency over raw bandwidth, especially for real-time communications and systems that require quick response times.

Network congestion is another common cause of latency, and this is where bufferbloat and jitter come into play.

Do your video or audio calls sometimes stutter? Do you experience a lag in video games, or does your web browsing slow down? If so, bufferbloat may be to blame.

Internet traffic travels over diverse paths with varying delays and bandwidth. To absorb these variations and traffic bursts, networks use buffers as temporary storage areas. They are everywhere: in routers, network interface cards (NICs) and at network boundaries. When someone sends a large file, many packets arrive at once, so the router holds them in a queue, and new packets must wait until the ones ahead of them have been sent.

Bufferbloat is a software issue with networking equipment (such as a router or switch). It occurs when packets arrive faster than the device can forward them, so its oversized buffers fill up and become “bloated.” The result is latency spikes on your Internet connection whenever a device on the network uploads or downloads files. The simplest analogy is a sink (your router) with a narrow drain (your connection to the ISP, which is probably the slowest link in your network): pour water in faster than it drains, and the sink fills up. That is what happens when a flow uses more than its fair share of the bottleneck. It will feel familiar to anyone often stuck in traffic jams in big cities or in line at the grocery store.
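The sink analogy can be put into numbers. In a simple first-in, first-out (FIFO) buffer, a new packet has to wait for everything queued ahead of it, so the added delay is the backlog divided by the outgoing link rate. A minimal Python sketch, assuming a single FIFO queue and ignoring protocol overhead (the function names and figures are illustrative):

```python
def queuing_delay_ms(queued_bytes, link_rate_mbps):
    """Extra delay for a packet that joins the back of a FIFO queue.

    Everything already queued must be transmitted first, so the wait is
    the backlog in bits divided by the outgoing link rate.
    """
    return queued_bytes * 8 / (link_rate_mbps * 1_000_000) * 1000


def buffer_bytes_for_delay(target_ms, link_rate_mbps):
    """Largest backlog (in bytes) that keeps queuing delay at or below target_ms."""
    return target_ms / 1000 * link_rate_mbps * 1_000_000 / 8


# Hypothetical: 256 full-size 1500-byte packets waiting on a 10 Mbps uplink
print(queuing_delay_ms(256 * 1500, 10))
print(buffer_bytes_for_delay(5, 10))
```

With that hypothetical backlog, every new packet waits about 307 ms, while keeping the delay to 5 ms on the same link allows only about 6,250 bytes (roughly four full-size packets) to sit in the queue.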

In today’s statistically multiplexed networks, timely packet loss is natural: TCP congestion control treats dropped packets as a signal to slow down. With oversized buffers, packets are queued instead of being dropped, so that signal arrives late and bufferbloat prevents TCP from controlling its sending rate. The results can be detrimental, including reduced throughput and increased jitter.

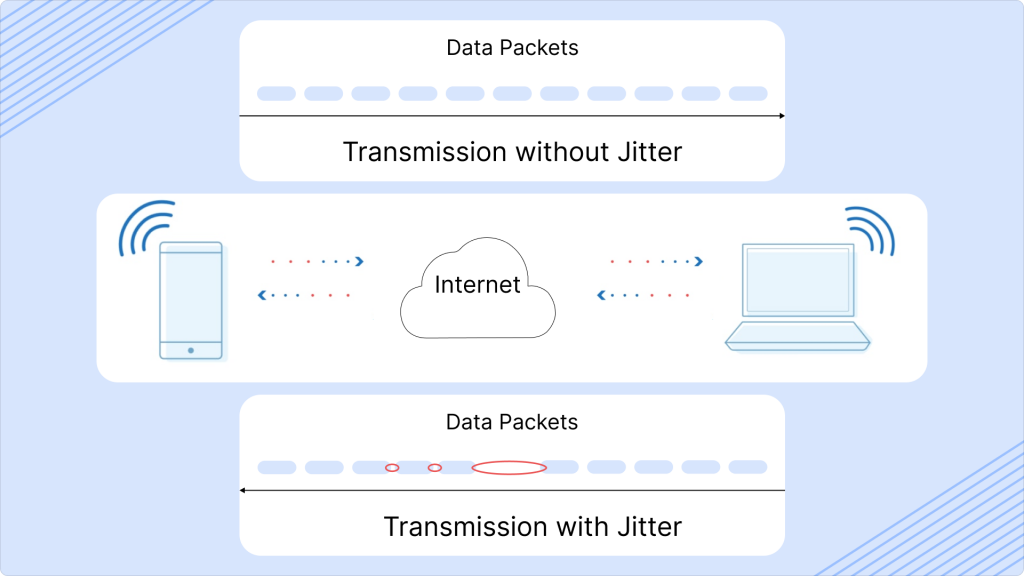

Jitter is a submeasurement of latency: the variation in latency over time. It reflects the differences in delay between successive packets sent through a network, and it indicates how stable the connection is.
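One widely used way to quantify jitter is the interarrival jitter estimator defined for RTP in RFC 3550: it tracks how much the transit time changes from one packet to the next and smooths the result with a gain of 1/16. A Python sketch, assuming matching send and receive timestamps in milliseconds (a constant clock offset between sender and receiver cancels out, because only differences in transit time are used):

```python
def rfc3550_jitter(send_times_ms, recv_times_ms):
    """Running interarrival jitter estimate, following RFC 3550 section 6.4.1.

    For consecutive packets, D is the change in one-way transit time; the
    estimate J moves 1/16 of the way towards |D| on every packet.
    """
    jitter = 0.0
    for i in range(1, len(send_times_ms)):
        transit_prev = recv_times_ms[i - 1] - send_times_ms[i - 1]
        transit_cur = recv_times_ms[i] - send_times_ms[i]
        d = transit_cur - transit_prev
        jitter += (abs(d) - jitter) / 16
    return jitter
```

Packets that all take the same time to arrive give zero jitter, however large that time is; only changes in delay increase the estimate.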

High latency, bufferbloat and jitter all lead to delays and interruptions in network performance. Bufferbloat increases latency, and high or inconsistent latency leads to jitter, which hurts the overall smoothness and speed of your online experience. Jitter typically disrupts VoIP calls, streaming and online gaming, causing choppy audio, video glitches or lag. In severe cases, high jitter leads to packet loss (packets that arrive too late to be useful), which results in errors, missing data or the need to reload pages. Even ordinary web browsing may feel choppy or inconsistent.

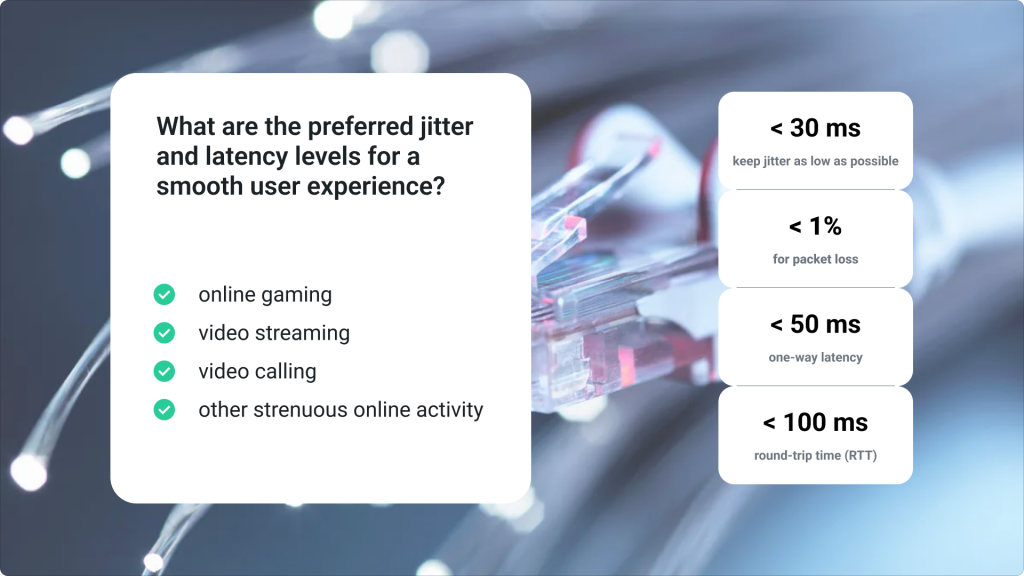

Buying a faster connection when link speed isn’t the problem wastes time and money. If your router buffers more data than necessary, the extra delay can’t be cured by faster transmission rates. Instead, aim to keep jitter as low as possible, ideally under 30 ms. As for latency, treat 150 ms one-way and 300 ms round-trip time (RTT) as the upper limit for an acceptable connection, though an RTT below 100 ms is generally preferred for a smooth experience.

It’s no exaggeration to say that bufferbloat is an ISP’s biggest enemy. So, what can you do about it? A range of diagnostic, monitoring and traffic-management tools can help detect these harmful effects on network performance and mitigate them.

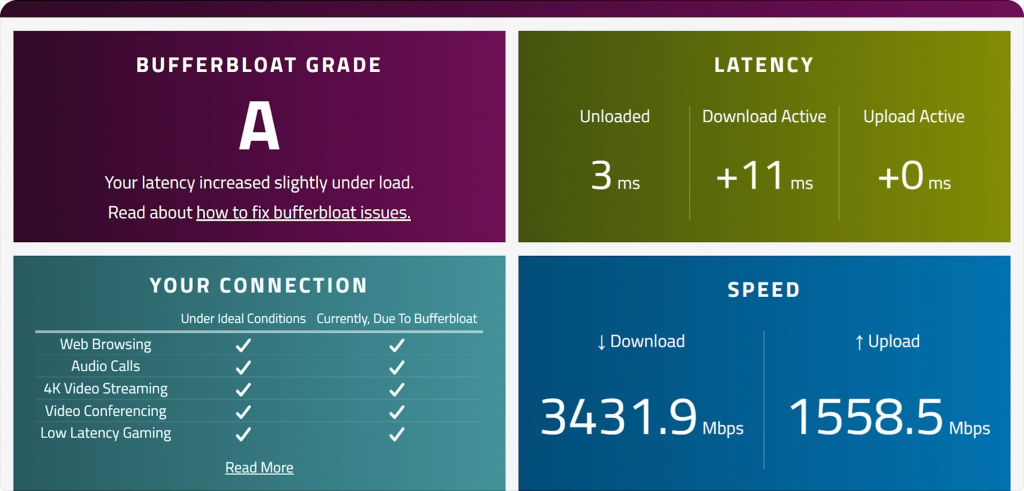

If the latency increase caused by other traffic is small (less than 20-30 ms), bufferbloat is well under control. You can check this quickly and easily with web-based tests such as the Waveform Bufferbloat test, Speedtest, Cloudflare, Fast.com or similar tools. Just make sure you’re connected over an Ethernet cable, not Wi-Fi, which significantly distorts the results.
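The same check can be scripted if you collect your own RTT samples, for example from `ping` on an idle link and again while a large download or speed test is running. This Python sketch is hypothetical: it compares the median RTT under load with the idle median and applies the rule-of-thumb limits from this article (about 30 ms of added latency, 100 ms RTT and 30 ms jitter). Jitter here is simply the mean absolute difference between consecutive samples.

```python
from statistics import mean, median


def simple_jitter_ms(rtts_ms):
    """Mean absolute difference between consecutive RTT samples."""
    diffs = [abs(b - a) for a, b in zip(rtts_ms, rtts_ms[1:])]
    return mean(diffs) if diffs else 0.0


def check_connection(idle_rtts_ms, loaded_rtts_ms,
                     bloat_limit_ms=30.0, rtt_limit_ms=100.0,
                     jitter_limit_ms=30.0):
    """Compare idle vs. loaded RTT samples against rule-of-thumb limits."""
    loaded = median(loaded_rtts_ms)
    increase = loaded - median(idle_rtts_ms)  # latency added by the load
    jitter = simple_jitter_ms(loaded_rtts_ms)
    return {
        "latency_increase_ms": increase,
        "jitter_ms": jitter,
        "bufferbloat_ok": increase < bloat_limit_ms,
        "loaded_rtt_ok": loaded < rtt_limit_ms,
        "jitter_ok": jitter < jitter_limit_ms,
    }
```

Using medians rather than averages keeps a single outlier ping from dominating the verdict, which matters when you only have a handful of samples.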

The quickest route to addressing bufferbloat, latency and jitter at the ISP level is to deploy a Quality of Experience (QoE) middlebox. These middleboxes manage network traffic in ways that prioritize time-sensitive packets, keeping performance smooth even during high-traffic periods. By using Smart Queue Management (SQM), also referred to as Active Queue Management (AQM), in QoE middleboxes, ISPs, WISPs and FISPs worldwide can mitigate the impact of bufferbloat and keep both latency and jitter stable. There are many different versions of SQM, and some are more effective than others. Popular SQM algorithms include CAKE, FQ-CoDel and PIE (all three are open source), each of which manages packet flow to reduce congestion and improve the end-user experience.
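To illustrate how an AQM algorithm decides when to drop, here is a heavily simplified Python sketch of the CoDel control law (RFC 8289), the AQM component inside FQ-CoDel. It uses CoDel’s default 5 ms target and 100 ms interval but omits parts of the real algorithm (the byte-based queue check, the count-reuse heuristic and ECN marking), so it is for illustration only and does not reflect how any product mentioned here implements it.

```python
from collections import deque
from math import sqrt

TARGET_MS = 5.0      # acceptable standing queue delay (CoDel default)
INTERVAL_MS = 100.0  # window the delay must persist for (CoDel default)


class CoDelQueue:
    """Simplified CoDel (RFC 8289) for illustration, not production use.

    A packet is dropped at dequeue time only once sojourn time has stayed
    at or above TARGET_MS for INTERVAL_MS; while that persists, each next
    drop is scheduled INTERVAL_MS / sqrt(count) after the previous one.
    """

    def __init__(self):
        self.queue = deque()         # (enqueue_time_ms, packet)
        self.first_above_time = 0.0  # 0.0 means "delay currently below target"
        self.dropping = False
        self.drop_next = 0.0
        self.count = 0
        self.dropped = []

    def enqueue(self, packet, now_ms):
        self.queue.append((now_ms, packet))

    def _ok_to_drop(self, sojourn_ms, now_ms):
        if sojourn_ms < TARGET_MS:
            self.first_above_time = 0.0
            return False
        if self.first_above_time == 0.0:
            # Delay just went above target: start the grace interval
            self.first_above_time = now_ms + INTERVAL_MS
            return False
        return now_ms >= self.first_above_time

    def dequeue(self, now_ms):
        while self.queue:
            enq_time, packet = self.queue.popleft()
            ok_to_drop = self._ok_to_drop(now_ms - enq_time, now_ms)
            if self.dropping:
                if not ok_to_drop:
                    self.dropping = False  # delay is back under control
                elif now_ms >= self.drop_next:
                    self.dropped.append(packet)
                    self.count += 1
                    self.drop_next += INTERVAL_MS / sqrt(self.count)
                    continue
            elif ok_to_drop:
                # Persistent queue detected: drop and enter dropping state
                self.dropped.append(packet)
                self.dropping = True
                self.count = 1
                self.drop_next = now_ms + INTERVAL_MS
                continue
            return packet
        # Queue is empty, so there is no standing delay
        self.first_above_time = 0.0
        self.dropping = False
        return None
```

The key design choice is that CoDel looks at how long packets actually sat in the queue (sojourn time) rather than how full the buffer is, so the same settings work largely independently of link speed.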

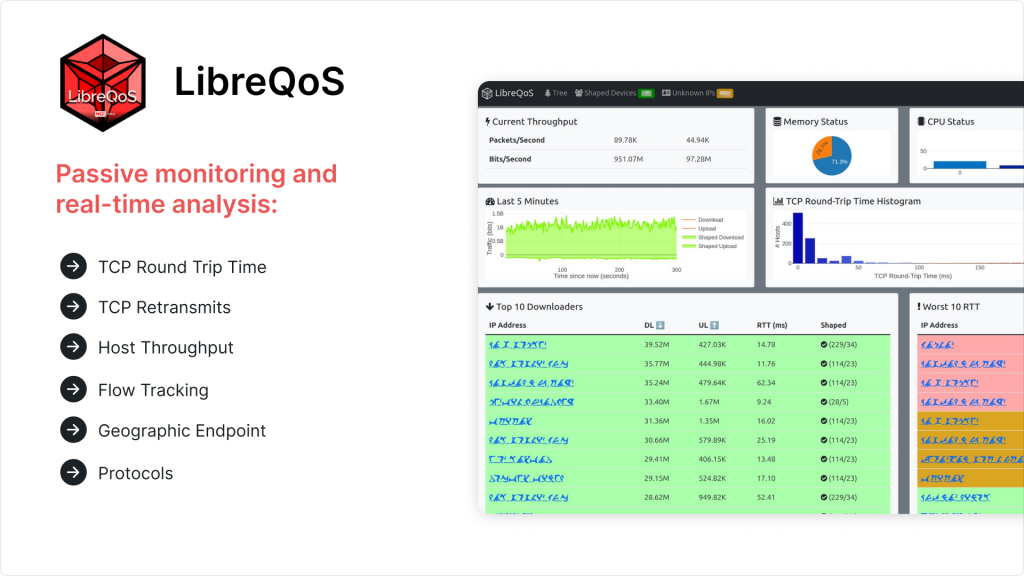

QoE middleboxes with SQM tailored for ISPs include solutions such as LibreQoS, Preseem, Bequant, Paraqum Technologies, Cambium Networks and Sandvine. The LibreQoS project, for example, stands out for its open-source approach to latency management across varied network environments, without complex queuing and traffic-shaping mechanics. It improves QoE by implementing Flow Queueing (FQ) and Active Queue Management (AQM) algorithms. Its open-source nature is particularly valuable for ISPs with diverse network setups, allowing flexible integration with equipment such as Ubiquiti CPEs and MikroTik routers. With custom shaping profiles, it effectively manages peak load and limits disruption from high-bandwidth activities such as speed tests.
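Flow queueing is what stops one heavy download from delaying everyone else’s packets. The sketch below is a minimal deficit round robin (DRR) scheduler in Python, the general scheduling idea behind FQ schedulers such as FQ-CoDel. It is an illustration under simplifying assumptions (no AQM, no sparse-flow priority, hypothetical class and method names), not LibreQoS code.

```python
from collections import deque


class FlowQueue:
    """Minimal deficit round robin (DRR) scheduler over per-flow queues.

    Each flow (e.g. identified by its 5-tuple) gets its own FIFO, and each
    active flow earns QUANTUM bytes of credit per round, so a bulk flow
    cannot make a small, latency-sensitive flow wait behind its backlog.
    """

    def __init__(self, quantum=1514):
        self.quantum = quantum
        self.flows = {}        # flow_id -> deque of (packet, size_bytes)
        self.deficit = {}      # flow_id -> unused byte credit
        self.active = deque()  # round-robin order of flows with queued packets

    def enqueue(self, flow_id, packet, size_bytes):
        if not self.flows.get(flow_id):
            # New or previously idle flow joins the end of the round
            self.flows.setdefault(flow_id, deque())
            self.deficit.setdefault(flow_id, 0)
            self.active.append(flow_id)
        self.flows[flow_id].append((packet, size_bytes))

    def dequeue(self):
        while self.active:
            flow_id = self.active[0]
            queue = self.flows[flow_id]
            packet, size = queue[0]
            if self.deficit[flow_id] >= size:
                # Enough credit: send the head packet of this flow
                self.deficit[flow_id] -= size
                queue.popleft()
                if not queue:
                    self.active.popleft()
                    self.deficit[flow_id] = 0
                return packet
            # Not enough credit: top up and move the flow to the back
            self.deficit[flow_id] += self.quantum
            self.active.rotate(-1)
        return None
```

For example, if a bulk flow has queued ten 1500-byte packets and a VoIP flow then adds one 200-byte packet, the VoIP packet leaves second instead of waiting behind the entire bulk backlog.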

The CTN Malawi case shows how effective such tools are in real-world scenarios: the seamless integration of LibreQoS provided burst speeds when needed, improving user experience without significantly increasing overall throughput. This approach also strengthened customer loyalty, as subscribers became advocates for the service on social media. For a closer look, watch the video below with our Client Relationship Manager, Heather Lombard, and Brian Longwe, CEO & Co-Founder of CTN.

How to build a strong ISP foundation with Splynx and LibreQoS | CTN Malawi’s Success Story

Latency becomes more noticeable over greater distances; we can’t defeat the laws of physics. That’s why content delivery networks (CDNs) spread their servers worldwide and data is replicated between data centers, so users can access services from the location closest to them for faster content delivery.
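Distance sets a hard floor on latency. Light in optical fiber travels at roughly the speed of light divided by the fiber’s refractive index (about 1.47 is a typical value, assumed here), which works out to roughly 1 ms of round-trip time per 100 km. A small Python sketch for a straight fiber run; real routes are longer and add equipment delays, so actual RTTs are higher:

```python
SPEED_OF_LIGHT_KM_S = 299_792.458
FIBER_REFRACTIVE_INDEX = 1.47  # typical value for silica fiber (assumption)


def fiber_rtt_ms(distance_km, refractive_index=FIBER_REFRACTIVE_INDEX):
    """Lower bound on round-trip time over a straight fiber run of this length."""
    speed_km_s = SPEED_OF_LIGHT_KM_S / refractive_index  # about 204,000 km/s
    one_way_s = distance_km / speed_km_s
    return 2 * one_way_s * 1000


# Hypothetical distances: same city, across a country, across an ocean
for km in (50, 1_000, 6_000):
    print(f"{km} km: {fiber_rtt_ms(km):.1f} ms")
```

No amount of bandwidth lowers these numbers; only moving the content closer to the user (or the user closer to the content) does.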

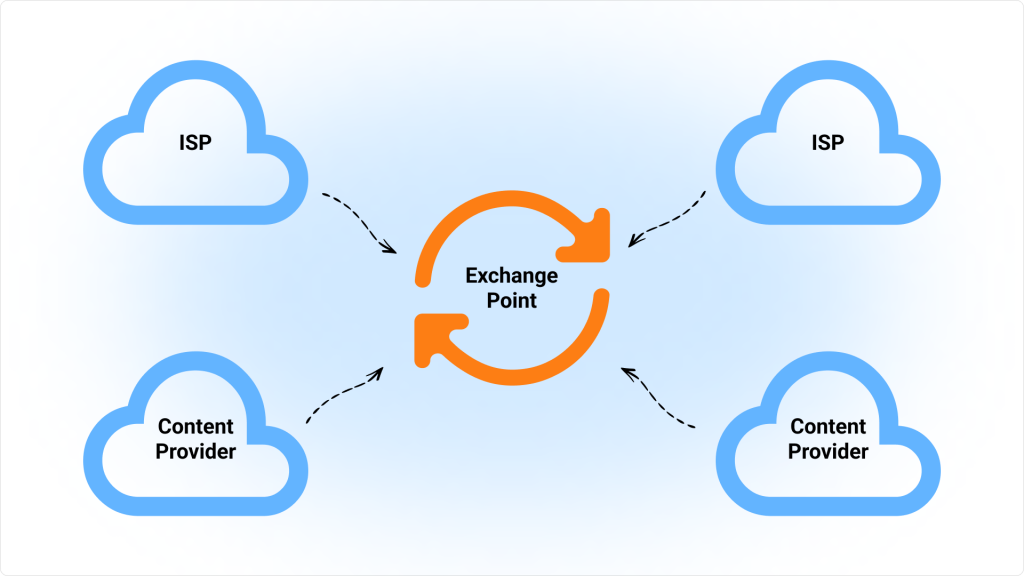

So, in addition to tackling last-mile latency and network congestion to improve QoE for users, we need more local Internet Exchange Points (IXPs). IXPs are the physical infrastructure through which CDNs and ISPs exchange internet traffic between their networks. Think of them as the building blocks around which the internet is built.

Bill Woodcock of Packet Clearing House explained how, where, why, and when an ISP should build IXPs (short answer: to get up to 45% of the traffic for free) at one of their recent workshops. Here are some main points from it:

You can view it in full at the link.
